The tree-building algorithm makes its best split at the root node, where it has the largest number of records and the most information to work with. Each subsequent split has a smaller and less representative population with which to work. Towards the end, the splits reflect idiosyncrasies of the training records at a particular node, that is, patterns peculiar only to those records.

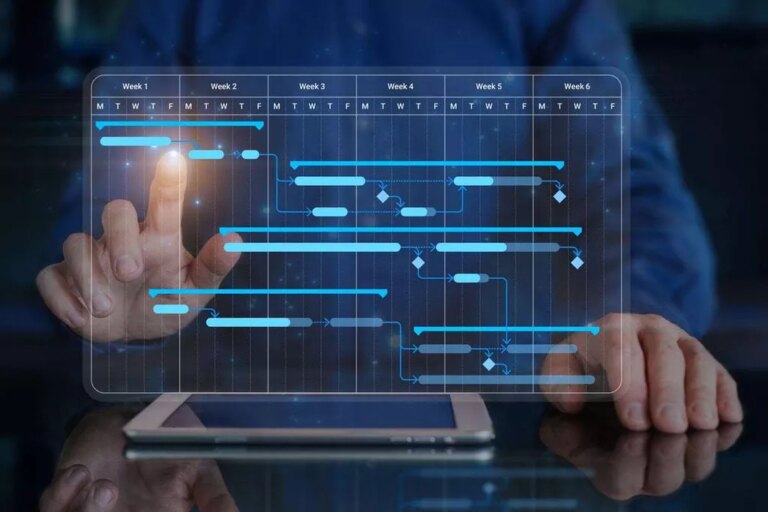

Figure 3 shows how a decision tree can be used for classification with two predictor variables. We build decision trees using a heuristic called recursive partitioning. This approach is also commonly known as divide and conquer because it splits the data into subsets, which are then split repeatedly into even smaller subsets. The process stops when the algorithm determines that the data within the subsets are sufficiently homogeneous or that another stopping criterion has been met.
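The recursive partitioning described above can be sketched in a few lines of Python. This is a minimal illustration, not the algorithm of any particular library: the Gini impurity criterion, the `max_depth` stopping rule, the function names, and the toy data with two predictors are all assumptions made for the example.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels (0.0 means perfectly homogeneous)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(rows, labels):
    """Return the (feature, threshold) that most reduces weighted impurity, or None."""
    best, best_score = None, gini(labels)
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if score < best_score:
                best, best_score = (f, t), score
    return best

def build_tree(rows, labels, depth=0, max_depth=3):
    """Split recursively until a node is pure, max_depth is reached, or no split helps."""
    split = None if depth >= max_depth else best_split(rows, labels)
    if split is None:
        return Counter(labels).most_common(1)[0][0]  # leaf: majority class
    f, t = split
    left = [(r, y) for r, y in zip(rows, labels) if r[f] <= t]
    right = [(r, y) for r, y in zip(rows, labels) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([r for r, _ in left], [y for _, y in left], depth + 1, max_depth),
        "right": build_tree([r for r, _ in right], [y for _, y in right], depth + 1, max_depth),
    }

def predict(tree, row):
    """Walk from the root to a leaf and return the class stored there."""
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree

# Toy data (invented for illustration): two predictors, two classes.
rows = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 5.0), (7.0, 6.0), (6.5, 7.0)]
labels = ["a", "a", "a", "b", "b", "b"]
tree = build_tree(rows, labels)
```

On this toy data a single split on the first predictor separates the two classes, so both children are pure and the recursion stops after one level.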

10. Classification tree algorithms for ordinal outcome variable

A properly pruned tree will restore generality to the classification process. Regression analysis could be used to predict the price of a house in Colorado, with prices plotted on a graph against time. The regression model can predict housing prices in the coming years using data points of what prices have been in previous years. Fitting a straight line to this relationship is a linear regression, which assumes that housing prices will continue to follow the same rising trend.

- By using this type of decision tree model, researchers can identify the combinations of factors that constitute the highest (or lowest) risk for a condition of interest.

- However, it sacrifices some priority for creating pure children, which can lead to additional splits that are not present with other metrics.

- Since the tree is grown from the Training Set, when it reaches full structure it usually suffers from over-fitting (i.e., it is explaining random elements of the Training Data that are not likely to be features of the larger population of data).

- Decision tree learning is a supervised learning approach used in statistics, data mining and machine learning.

OML (O’Leary, Mengersen, Low Choy), 2008, proposed a Bayesian approach for the CART model by extending the Bayesian approach of DMS. These two Bayesian approaches differ in aspects such as the stopping rule of the simulation algorithm, the convergence diagnostic plots, the criteria for identifying good trees, and the prior distributions considered for parameters in the terminal nodes [88, 96, 98].

We use the analysis of risk factors related to major depressive disorder (MDD) in a four-year cohort study [17] to illustrate the building of a decision tree model. The goal of the analysis was to identify the most important risk factors from a pool of 17 potential risk factors, including gender, age, smoking, hypertension, education, employment, life events, and so forth.

Second Example: Add a numerical Variable

As the branches get longer, there are fewer independent variables available because the rest have already been used further up the branch. The splitting stops when the best p-value is not below the specified threshold. The leaf nodes of the tree are the nodes that were not split, either because no candidate split had a p-value below the threshold or because all independent variables had already been used.

An alternative to limiting tree growth is pruning using k-fold cross-validation. First, we build a reference tree on the entire data set and allow this tree to grow as large as possible. Next, we divide the input data set into training and test sets in k different ways to generate different trees. We evaluate each tree on the test set as a function of size, choose the smallest size that meets our requirements and prune the reference tree to this size by sequentially dropping the nodes that contribute least.

One way of modelling constraints is using the refinement mechanism in the classification tree method.
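Two pieces of the cross-validated pruning procedure can be sketched in Python: generating the k train/test divisions, and choosing the smallest adequate tree size from the per-fold test errors. Both helper names are hypothetical, the error values are invented, and the "requirement" used here (mean error within a tolerance of the best mean error) is only one possible choice. Growing and pruning the trees themselves is left out.

```python
def k_fold_indices(n, k):
    """Yield (train, test) index lists for k non-overlapping test folds over n records."""
    folds = [list(range(i, n, k)) for i in range(k)]
    for i, test in enumerate(folds):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, test

def smallest_adequate_size(errors_by_size, tolerance=0.01):
    """Pick the smallest tree size whose mean test error is within tolerance of the best."""
    mean_err = {size: sum(errs) / len(errs) for size, errs in errors_by_size.items()}
    best = min(mean_err.values())
    return min(size for size, err in mean_err.items() if err <= best + tolerance)

# Hypothetical test errors per tree size (number of leaves), one value per fold.
errors_by_size = {
    1: [0.40, 0.38, 0.42],
    3: [0.22, 0.20, 0.21],
    5: [0.21, 0.20, 0.20],
    9: [0.20, 0.21, 0.20],
}
splits = list(k_fold_indices(6, 3))
target_size = smallest_adequate_size(errors_by_size)
```

With these invented numbers the sizes 3, 5 and 9 all perform about equally well, so the smallest of them is chosen as the size to which the reference tree would be pruned.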

The accuracy of each rule is then evaluated to determine the order in which they should be applied. Pruning is done by removing a rule’s precondition if the accuracy of the rule improves without it. For instance, decision trees can learn from data to approximate a sine curve with a set of if-then-else decision rules. The deeper the tree, the more complex the decision rules and the more closely the model fits the data. In decision analysis, a decision tree can be used to visually and explicitly represent decisions and decision making.

Now we can calculate the information gain achieved by splitting on the windy feature. Classification trees are a hierarchical way of partitioning the space. We start with the entire space and recursively divide it into smaller regions. The service-composition approaches tend to offer the most flexible interaction to users and Hourglass [16] is an example of a non-semantic-based solution.
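The information gain calculation for the windy feature can be sketched as follows. The class counts are an assumption: they come from the classic 14-record weather ("play tennis") data set, which the text does not reproduce, with 9 "yes" and 5 "no" records overall, 6 yes and 2 no when windy is false, and 3 yes and 3 no when windy is true.

```python
import math

def entropy(counts):
    """Shannon entropy in bits of a class distribution given as a list of counts."""
    total = sum(counts)
    return -sum(c / total * math.log2(c / total) for c in counts if c > 0)

def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child nodes."""
    total = sum(parent)
    weighted = sum(sum(child) / total * entropy(child) for child in children)
    return entropy(parent) - weighted

# Assumed counts [yes, no]: whole data set, then windy = false and windy = true.
gain = information_gain([9, 5], [[6, 2], [3, 3]])
```

Under these assumed counts the gain is roughly 0.048 bits, a small value because the windy = true branch is split evenly between the two classes and so remains maximally impure.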

These aspects form the input and output data space of the test object. A patient organisation (Depressie Vereniging, Amersfoort, Netherlands) was involved in the design of the study [37], the development of prevention strategies for relapse, participant recruitment, and the discussion of the interpretation of the results. An independent medical ethics committee for all included sites (METIGG) approved the DRD trial protocol.

Classification Tree Analysis (CTA) is an analytical procedure that takes examples of known classes (i.e., training data) and constructs a decision tree based on measured attributes such as reflectance. In essence, the algorithm iteratively selects the attribute (such as reflectance band) and value that can split a set of samples into two groups, minimizing the variability within each subgroup while maximizing the contrast between the groups. In a classification tree, the data set splits according to its variables.